OROFACE – Orofacial Gesture Training Tool

Vicomtech’s OROFACE digital solution is built on functionality from Vicomtech’s Viulib library for detecting emotions and gestures in facial images beyond the “basic” facial expressions. It captures more fine-grained, dimensional emotions following the Valence-Arousal-Dominance (VAD) model, as well as other facial gestures that are not necessarily related to emotions and can serve other applications, such as orofacial rehabilitation.

The VAD model maps emotional states to a space of three orthogonal dimensions (valence, arousal and dominance), in which the distance from one emotion to another can be measured. Because dimensional models represent an emotion as a real-valued vector in this space, they are likely to capture subtle emotional expressions better than categorical models, which use a finite set of “basic” emotions. Capturing fine-grained emotions with dimensional VAD models could benefit clinical natural language processing (NLP) research, psychotherapy research on emotion regulation, and other computational social science work that deals with subtle emotion recognition.
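To illustrate what “measurable distances” between emotions means, the following Python sketch treats each emotion as a (valence, arousal, dominance) vector and compares vectors with Euclidean distance. The reference coordinates in `EMOTIONS` and the helper names `vad_distance` and `nearest_category` are hypothetical; they are not taken from OROFACE or Viulib, and the choice of Euclidean distance is itself an assumption.

```python
import math

# Hypothetical reference points in VAD space, each axis scaled to [-1, 1].
# Illustrative values only; not produced by OROFACE or Viulib.
EMOTIONS = {
    "joy": (0.8, 0.5, 0.4),
    "contentment": (0.6, -0.3, 0.2),
    "anger": (-0.6, 0.7, 0.5),
    "fear": (-0.7, 0.6, -0.6),
    "sadness": (-0.6, -0.4, -0.4),
}


def vad_distance(a, b):
    """Euclidean distance between two (valence, arousal, dominance) vectors."""
    return math.dist(a, b)


def nearest_category(vad):
    """Return the reference emotion closest to a continuous VAD estimate."""
    return min(EMOTIONS, key=lambda name: vad_distance(vad, EMOTIONS[name]))


print(round(vad_distance(EMOTIONS["joy"], EMOTIONS["contentment"]), 3))  # 0.849
print(round(vad_distance(EMOTIONS["joy"], EMOTIONS["anger"]), 3))        # 1.418
print(nearest_category((0.7, 0.2, 0.3)))                                 # joy
```

With these made-up coordinates, joy lies closer to contentment than to anger, a graded relationship that a purely categorical label set cannot express; a continuous estimate can still be mapped back to a nearest label when one is needed.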

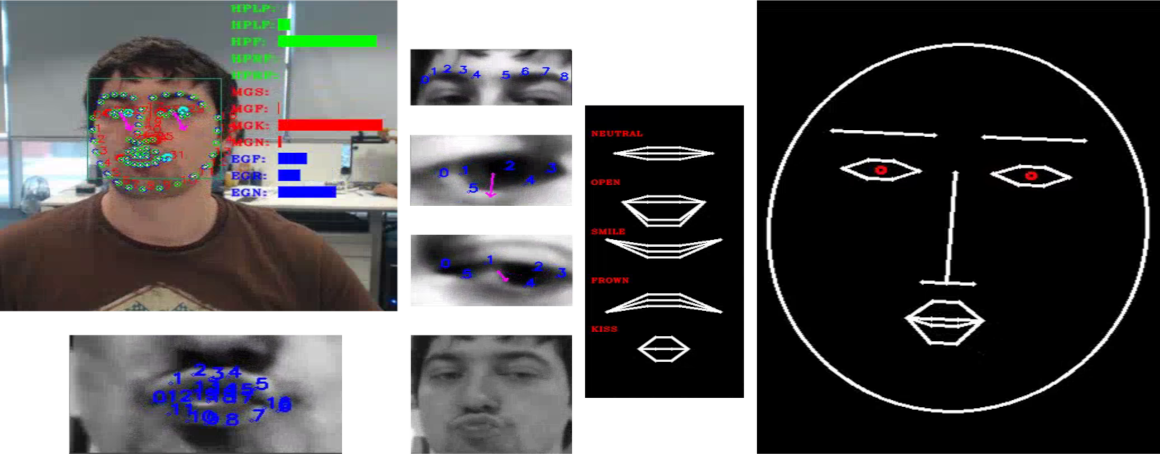

The image processing pipeline involves the following stages:

- Individuals are detected in images captured “in the wild” (i.e., under unconstrained, real-world conditions), and their facial landmarks and regions are extracted when visible;

- Several facial attributes are estimated in a single forward pass of a multi-modal, multi-level and multi-task Deep Neural Network (DNN), which allows the solution to be deployed efficiently on low-resource devices.
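The idea of estimating several facial attributes in one forward pass can be sketched as a shared backbone feeding several task-specific heads. The toy NumPy model below is an assumption for illustration only: the class `ToyMultiTaskNet`, its random untrained weights, the 136-value input (assumed to be 68 (x, y) facial landmarks) and the five gesture classes are all hypothetical, and it does not reflect the actual OROFACE network, which is additionally multi-modal and multi-level.

```python
import numpy as np


class ToyMultiTaskNet:
    """Toy multi-task network: one shared backbone, several task heads.

    Hypothetical sketch with random (untrained) weights; it shows the
    structure of a single-pass multi-task model, not OROFACE's DNN.
    """

    def __init__(self, in_dim=136, hidden=64, n_gestures=5, seed=0):
        rng = np.random.default_rng(seed)
        # Shared backbone weights (assumed input: 68 landmarks x 2 coords).
        self.w_shared = rng.standard_normal((in_dim, hidden)) * 0.1
        # Task-specific heads: VAD regression and gesture classification.
        self.w_vad = rng.standard_normal((hidden, 3)) * 0.1
        self.w_gesture = rng.standard_normal((hidden, n_gestures)) * 0.1

    def forward(self, x):
        """Run one forward pass and return every task's output."""
        h = np.maximum(x @ self.w_shared, 0.0)  # shared ReLU features, computed once
        vad = np.tanh(h @ self.w_vad)  # valence, arousal, dominance in [-1, 1]
        logits = h @ self.w_gesture
        # Numerically stable softmax over the hypothetical gesture classes.
        exp = np.exp(logits - logits.max(axis=-1, keepdims=True))
        gesture_probs = exp / exp.sum(axis=-1, keepdims=True)
        return {"vad": vad, "gesture_probs": gesture_probs}


# Usage: a batch of 4 hypothetical landmark vectors.
net = ToyMultiTaskNet()
batch = np.random.default_rng(1).standard_normal((4, 136))
outputs = net.forward(batch)
print(outputs["vad"].shape, outputs["gesture_probs"].shape)  # (4, 3) (4, 5)
```

Because the shared features `h` are computed once and reused by every head, each additional attribute costs only one more small matrix product, which is the kind of saving that makes multi-task models attractive on low-resource devices.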

User privacy is fully preserved, as no facial images are stored at any point.
